How can we make a robot learn in the real world while ensuring safety? In this work, we show how it is possible to tackle this problem. The key idea is to exploit domain knowledge and use the constraint definition to our advantage. Following our approach, it is possible to implement learning robotic agents that can explore and learn in an arbitrary environment while ensuring safety at the same time.

Safety and learning in robots

Safety is a fundamental feature in real-world robotics applications: robots should not cause damage to the environment or to themselves, and they must ensure the safety of people operating around them. To ensure safety when we deploy a new application, we want to avoid constraint violations at any time. These stringent safety constraints are difficult to enforce in a reinforcement learning setting. This is the reason why it is hard to deploy learning agents in the real world. Classical reinforcement learning agents use random exploration, such as Gaussian policies, to act in the environment and extract useful knowledge to improve task performance. However, random exploration may cause constraint violations. These constraint violations must be avoided at all costs on robotic platforms, as they often result in a major system failure.

While the robotic framework is challenging, it is also a very well-known and well-studied problem: thus, we can exploit some key results and knowledge from the field. Indeed, a robot's kinematics and dynamics are often known and can be exploited by the learning system. Also, physical constraints, e.g., avoiding collisions and enforcing joint limits, can be written in analytical form. All this information can be exploited by the learning robot.

Our approach

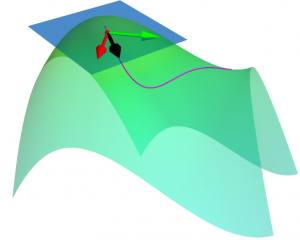

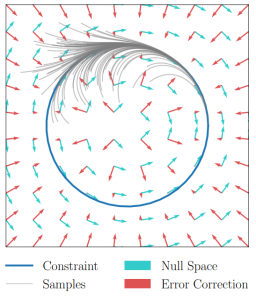

Many reinforcement learning approaches try to solve the safety problem by incorporating the constraint information in the learning process. This often results in slower learning, while still not being able to ensure safety during the whole learning process. Instead, we present a novel perspective on the problem, introducing ATACOM (Acting on the TAngent Space of the COnstraint Manifold). Unlike other state-of-the-art approaches, ATACOM tries to create a safe action space in which every action is inherently safe. To do so, we need to construct the constraint manifold and exploit the basic domain knowledge of the agent. Once we have the constraint manifold, we define our action space as the tangent space to the constraint manifold.

We can construct the constraint manifold using arbitrary differentiable constraints. The only requirement is that the constraint function must depend only on controllable variables, i.e., the variables that we can directly control with our control action. An example could be the robot joint positions and velocities.

We can support both equality and inequality constraints. Inequality constraints are particularly important, as they can be used to avoid specific areas of the state space or to enforce the joint limits. However, they do not define a manifold. To obtain a manifold, we transform the inequality constraints into equality constraints by introducing slack variables.
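
To make the slack-variable idea concrete, here is a minimal sketch in Python. It assumes one common quadratic form, c(q, mu) = g(q) + 0.5 * mu^2 = 0 for an inequality g(q) <= 0; this specific form, the upper joint limit `q_max`, and all function names are illustrative assumptions, not taken from the text above.

```python
import math


def joint_limit(q, q_max):
    """Inequality constraint g(q) <= 0 for an upper joint limit (hypothetical example)."""
    return q - q_max


def slack_for(g_value):
    """Return mu >= 0 such that g + 0.5 * mu**2 == 0. Only defined when g <= 0."""
    if g_value > 0:
        raise ValueError("constraint is violated, no real slack variable exists")
    return math.sqrt(-2.0 * g_value)


def equality_residual(q, mu, q_max):
    """Equality constraint c(q, mu) = g(q) + 0.5 * mu**2 (assumed quadratic slack form)."""
    return joint_limit(q, q_max) + 0.5 * mu ** 2


q, q_max = 0.5, 2.0
mu = slack_for(joint_limit(q, q_max))
residual = equality_residual(q, mu, q_max)
print(mu, residual)
```

Every pair (q, mu) with zero residual corresponds to a joint position that respects the limit, so the set of such pairs is a manifold we can move on. A position beyond the limit has no real slack value, which is why `slack_for` raises an error in that case.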

With ATACOM, we can ensure safety by taking actions on the tangent space of the constraint manifold. An intuitive way to see why this is true is to consider motion on the surface of a sphere: any point with a velocity tangent to the sphere will keep moving on the surface of the sphere. The same idea can be extended to more complex robotic systems, considering the acceleration of the system variables (or of the generalized coordinates, when considering a mechanical system) instead of velocities.
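
The sphere intuition can be checked numerically. The sketch below is only an illustration, with the constraint c(p) = |p|^2 - 1 and the helper `project_to_tangent` chosen by us: it removes the radial part of an arbitrary velocity, so that the instantaneous rate of change of the constraint is zero.

```python
def dot(a, b):
    """Dot product of two equally sized lists."""
    return sum(x * y for x, y in zip(a, b))


def project_to_tangent(p, v):
    """Remove from v its component along the sphere normal at p (the direction of p)."""
    radial = dot(p, v) / dot(p, p)
    return [vi - radial * pi for pi, vi in zip(p, v)]


p = [0.0, 0.0, 1.0]   # a point on the unit sphere
v = [1.0, 2.0, 3.0]   # an arbitrary desired velocity
t = project_to_tangent(p, v)

# For c(p) = |p|^2 - 1 the time derivative is 2 * p . v, which vanishes for tangent velocities.
constraint_rate = 2.0 * dot(p, t)
print(t, constraint_rate)
```

Any velocity the agent asks for is mapped to one that keeps the constraint value unchanged in continuous time, which is what makes every action in the tangent space safe.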

The above-mentioned framework only works if we consider continuous-time systems, where the control action is the instantaneous velocity or acceleration. Unfortunately, the vast majority of robotic controllers and reinforcement learning approaches are discrete-time digital controllers. Thus, even taking the tangent direction of the constraint manifold will result in a constraint violation. It is always possible to reduce the violations by increasing the control frequency. However, the error accumulates over time, causing a drift from the constraint manifold. To solve this issue, we introduce an error correction term that keeps the system close to the constraint manifold. In our work, we implement this term as a simple proportional controller.
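
The drift and its correction can be reproduced on a toy problem. The following sketch is not the implementation used in the work: it is an assumed setup with a point on the unit circle, constraint c(q) = x^2 + y^2 - 1, explicit Euler steps, and a proportional correction of the form -gain * pinv(J) * c(q). The function `simulate` and its parameters are hypothetical.

```python
import math


def simulate(steps, dt, gain):
    """Move along the unit circle with Euler steps and return the final |c(q)|."""
    x, y = 1.0, 0.0
    for _ in range(steps):
        c = x * x + y * y - 1.0            # constraint value, zero on the manifold
        jx, jy = 2.0 * x, 2.0 * y          # constraint Jacobian J = [2x, 2y]
        jj = jx * jx + jy * jy             # J J^T (a scalar for one constraint)
        norm = math.sqrt(x * x + y * y)
        tx, ty = -y / norm, x / norm       # unit tangent direction (null space of J)
        # Proportional error correction: -gain * pinv(J) * c, with pinv(J) = J^T / (J J^T)
        cx, cy = -gain * c * jx / jj, -gain * c * jy / jj
        x += dt * (tx + cx)
        y += dt * (ty + cy)
    return abs(x * x + y * y - 1.0)


drift_plain = simulate(steps=1000, dt=0.01, gain=0.0)
drift_corrected = simulate(steps=1000, dt=0.01, gain=10.0)
print(drift_plain, drift_corrected)
```

With `gain=0.0` each Euler step along the tangent pushes the point slightly outwards and the violation keeps growing, while the proportional term pulls it back so that the violation settles at a small value. That value shrinks further with a smaller time step, matching the remark about increasing the control frequency.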

Finally, many robotic systems cannot be controlled directly by velocity or acceleration. However, if an inverse dynamics model or a tracking controller is available, we can use it to compute the correct control action.

Results

We tried ATACOM on a simulated air hockey task, using two different types of robots. The first one is a planar robot. In this task, we enforce constraints on the joint velocities and we avoid collisions of the end-effector with the table boundaries.

The second robot is a KUKA iiwa 14 arm. In this scenario, we constrain the end-effector to move on the planar surface and we ensure that no collision occurs between the robot arm and the table.

In both experiments, we can learn a safe policy using the Soft Actor-Critic algorithm as the learning algorithm together with the ATACOM framework. With our approach, we are able to learn good policies quickly and we can ensure low constraint violations at every timestep. Unfortunately, the constraint violation cannot be zero due to discretization, but it can be made arbitrarily small. This is not a major issue in real-world systems, as they are affected by noisy measurements and non-ideal actuation anyway.

Is the safety problem solved now?

The key question to ask is whether ATACOM can provide any safety guarantee. Unfortunately, this is not true in general. What we can enforce are state constraints at each timestep. This includes a wide class of constraints, such as fixed obstacle avoidance, joint limits, and surface constraints. We can extend our method to constraints that involve variables that are not (directly) controllable. While we can ensure safety to a certain extent in this scenario too, we cannot ensure that the constraints will not be violated during the whole trajectory. Indeed, if the non-controllable variables act in an adversarial way, they might find a long-term strategy that eventually causes a constraint violation. An easy example is a prey-predator scenario: even if we ensure that the prey avoids each predator, a group of predators can carry out a high-level strategy and trap the agent in the long run.

Thus, with ATACOM we can ensure safety at the step level, but we are not able to ensure long-term safety, which requires reasoning at the trajectory level. To ensure this kind of safety, more advanced techniques will be needed.

Find out more

The authors were best paper award finalists at CoRL this year, for their work: Robot reinforcement learning on the constraint manifold.

- Read the paper.

- The GitHub page for the work is here.

- Read more about the winning and shortlisted papers for the CoRL awards here.

Puze Liu

is a PhD student in the Intelligent Autonomous Systems Group, Technical University Darmstadt

Davide Tateo

is a Postdoctoral Researcher at the Intelligent Autonomous Systems Laboratory in the Computer Science Department of the Technical University of Darmstadt

Haitham Bou-Ammar

leads the reinforcement learning team at Huawei Technologies Research & Development UK and is an Honorary Lecturer at UCL

Jan Peters

is a full professor for Intelligent Autonomous Systems at the Technische Universitaet Darmstadt and a senior research scientist at the MPI for Intelligent Systems
