
MLflow is an open source platform that was developed to manage the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry. It integrates with many popular ML libraries such as scikit-learn, XGBoost, TensorFlow, and PyTorch to support a broad range of use cases. Databricks offers a diverse computing environment with a wide range of pre-installed libraries, including MLflow, that allow customers to develop models without having to worry about dependency management. For example, the table below shows which XGBoost version is pre-installed in different Databricks Runtime for Machine Learning (MLR) environments:

| MLR version | Pre-installed XGBoost version |
|---|---|
| 10.3 | 1.5.1 |
| 10.2 | 1.5.0 |
| 10.1 | 1.4.2 |

As we can see, different MLR environments provide different library versions. In addition, users often want to upgrade libraries to try new features. This range of versions poses a significant compatibility challenge and requires a comprehensive testing strategy. Testing MLflow against only one specific version (for instance, only the latest version) is insufficient; we need to test MLflow against the range of ML library versions that users commonly rely on. Another challenge is that ML libraries are constantly evolving and releasing new versions, which may contain breaking changes that are incompatible with the integrations MLflow provides (for instance, the removal of an API that MLflow relies on for model serialization). We want to detect such breaking changes as early as possible, ideally even before they are shipped in a new release. To address these challenges, we have implemented cross-version testing.

What is cross-version testing?

Cross-version testing is a testing strategy we implemented to ensure that MLflow is compatible with many versions of widely used ML libraries (e.g. scikit-learn 1.0 and TensorFlow 2.6.3).

Testing structure

We implemented cross-version testing using GitHub Actions workflows that trigger automatically every day, as well as whenever a relevant pull request is filed. A test workflow automatically identifies a matrix of versions to test for each of MLflow's library integrations and creates a separate job for each one. Each of these jobs runs the collection of tests that is relevant to that ML library.

Configuration file

We configure cross-version testing as code using a YAML file that looks like the following.

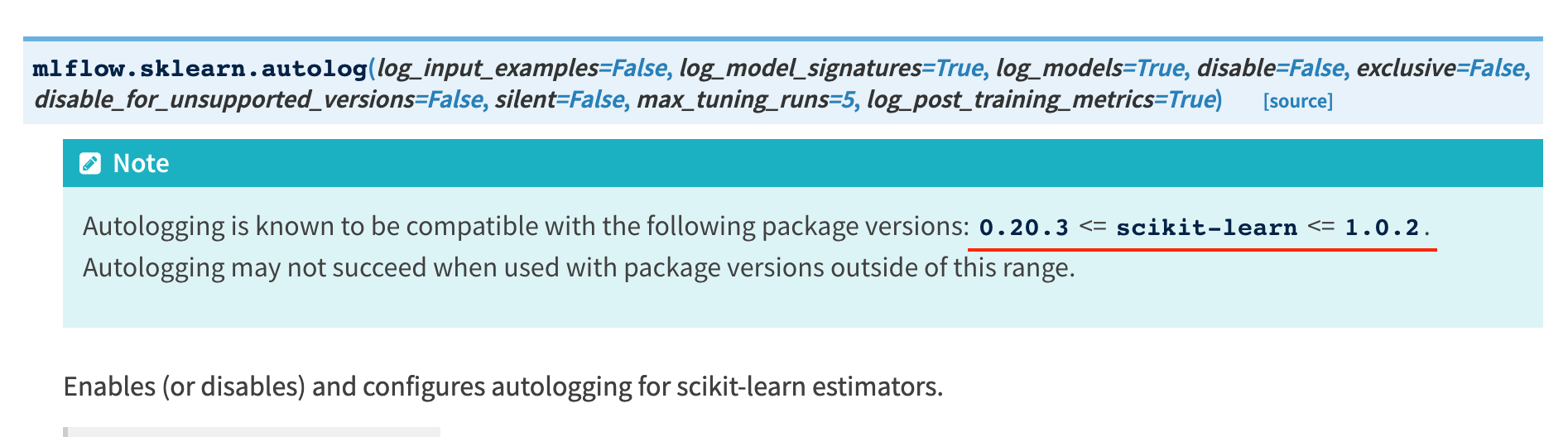

```yaml
# Integration name
sklearn:
  package_info:
    # Package this integration depends on
    pip_release: "scikit-learn"
    # Command to install the prerelease version of the package
    install_dev: |
      pip install git+https://github.com/scikit-learn/scikit-learn.git

  # Test category. Can be one of ["models", "autologging"]
  # "models" means tests for model serialization and serving
  # "autologging" means tests for autologging
  autologging:
    # Additional requirements to run tests
    # `">= 0.24.0": ["matplotlib"]` means "Install matplotlib
    # if scikit-learn version is >= 0.24.0"
    requirements:
      ">= 0.24.0": ["matplotlib"]
    # Versions that should not be supported due to unacceptable issues
    unsupported: ["0.22.1"]
    # Minimum supported version
    minimum: "0.20.3"
    # Maximum supported version
    maximum: "1.0.2"
    # Command to run tests
    run: |
      pytest tests/sklearn/autologging

xgboost:
  ...
```

One of the outcomes of cross-version testing is that MLflow can clearly document which ML library versions it supports and warn users when an installed library version is unsupported. For example, the documentation for the mlflow.sklearn.autolog API lists the range of compatible scikit-learn versions.

Refer to the documentation of the mlflow.sklearn.autolog API for further reading.
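The meaning of the `requirements`, `unsupported`, `minimum`, and `maximum` fields can be illustrated in plain Python. This is a minimal sketch, not MLflow's implementation, under several assumptions: the config is a hand-written dict mirroring the sklearn `autologging` entry (a real setup would load the YAML file with a YAML parser), `parse_version` is a hypothetical, simplified parser that only understands `X.Y[.Z][.postN]` strings rather than full PEP 440, the requirement keys are assumed to be `"<operator> <version>"` with a single space, and treating versions newer than `maximum` as unsupported is this sketch's own choice. The function names and warning text are illustrative.

```python
import logging
import operator
import re

_logger = logging.getLogger(__name__)

# Hypothetical in-memory copy of the sklearn "autologging" entry from the YAML file.
SKLEARN_AUTOLOGGING = {
    "requirements": {">= 0.24.0": ["matplotlib"]},
    "unsupported": ["0.22.1"],
    "minimum": "0.20.3",
    "maximum": "1.0.2",
}

# Comparison operators accepted in requirement keys such as ">= 0.24.0".
_OPS = {">=": operator.ge, "<=": operator.le, ">": operator.gt, "<": operator.lt, "==": operator.eq}


def parse_version(version):
    """Simplified parser for 'X.Y[.Z][.postN]' strings (not full PEP 440)."""
    match = re.fullmatch(r"(\d+(?:\.\d+)*)(?:\.post(\d+))?", version)
    if match is None:
        raise ValueError(f"Unrecognized version: {version!r}")
    release = tuple(int(part) for part in match.group(1).split("."))
    release += (0,) * (3 - len(release))  # pad "1.0" to (1, 0, 0)
    post = int(match.group(2)) if match.group(2) else -1  # plain release sorts before .postN
    return release + (post,)


def extra_requirements(config, version):
    """Return the extra packages to install when testing the given library version."""
    packages = []
    for spec, pkgs in config.get("requirements", {}).items():
        op_symbol, bound = spec.split()  # e.g. ">= 0.24.0" -> (">=", "0.24.0")
        if _OPS[op_symbol](parse_version(version), parse_version(bound)):
            packages.extend(pkgs)
    return packages


def is_supported(config, version):
    """True if version is within [minimum, maximum] and not explicitly unsupported."""
    if version in config.get("unsupported", []):
        return False
    v = parse_version(version)
    return parse_version(config["minimum"]) <= v <= parse_version(config["maximum"])


def warn_if_unsupported(package, config, version):
    """Log a warning and return False when the installed version is unsupported."""
    if is_supported(config, version):
        return True
    _logger.warning(
        "You are using an unsupported version of %s (%s). Supported versions: %s to %s.",
        package, version, config["minimum"], config["maximum"],
    )
    return False


print(extra_requirements(SKLEARN_AUTOLOGGING, "0.24.2"))  # ['matplotlib']
print(is_supported(SKLEARN_AUTOLOGGING, "0.20.2"))  # False: older than the minimum
warn_if_unsupported("sklearn", SKLEARN_AUTOLOGGING, "0.22.1")  # logs a warning: listed as unsupported
```

Keeping `unsupported` as an explicit list alongside the range lets a single problematic release (here 0.22.1) be excluded without narrowing the whole supported window.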

Next, let's take a look at how the unsupported version warning works. In the Python script below, we patch sklearn.__version__ with 0.20.2, which is older than the minimum supported version 0.20.3, to demonstrate the feature, and then call mlflow.sklearn.autolog:

```python
from unittest import mock

import mlflow

# Assume scikit-learn 0.20.2 is installed
with mock.patch("sklearn.__version__", "0.20.2"):
    mlflow.sklearn.autolog()
```

The script above prints the following message to warn the user that an unsupported version of scikit-learn (0.20.2) is being used and that autologging may not work properly:

```
2022/01/21 16:05:50 WARNING mlflow.utils.autologging_utils: You are using an unsupported version of sklearn. If you encounter errors during autologging, try upgrading / downgrading sklearn to a supported version, or try upgrading MLflow.
```

Running tests

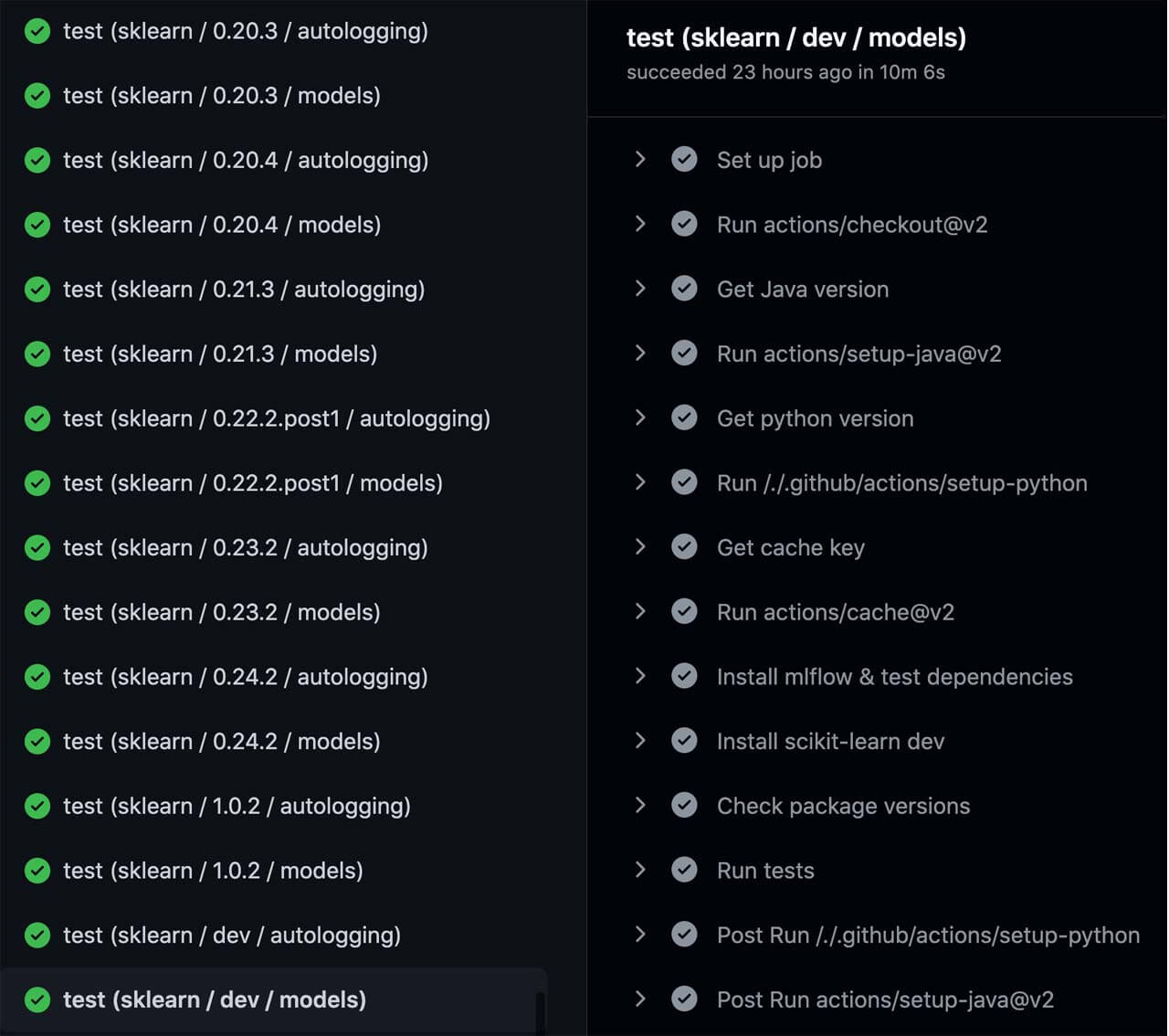

Now that we have a testing structure, let's run the tests. To start, we created a GitHub Actions workflow that constructs a testing matrix from the configuration file and runs each item in the matrix as a separate job in parallel. An example of the GitHub Actions workflow summary for scikit-learn cross-version testing is shown above. Based on the configuration, the minimum version is "0.20.3", which is shown at the top. We populate all versions that exist between that minimum version and the maximum version "1.0.2". At the bottom, you can see one final test: the "dev" version, which represents a prerelease version of scikit-learn installed from the main development branch of scikit-learn/scikit-learn via the command specified in the install_dev field. We'll explain the purpose of this prerelease version testing in the "Testing the future" section later.
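The matrix-building step can be pictured with a short sketch. This is a hedged illustration, not the actual MLflow workflow code: the list of released versions is a hard-coded sample (a real implementation would query PyPI), the config is a flattened hypothetical dict (in the YAML file `pip_release` and `install_dev` live under `package_info`), and the job fields `package`, `version`, `install`, and `run` are names chosen for this example. The JSON output assumes a downstream GitHub Actions job that reads it into `strategy.matrix` with the `fromJSON` expression. The per-minor-version reduction described in the next section is left out here.

```python
import json
import re


def parse_version(version):
    """Simplified parser for 'X.Y[.Z][.postN]' strings (hypothetical helper, not PEP 440)."""
    match = re.fullmatch(r"(\d+(?:\.\d+)*)(?:\.post(\d+))?", version)
    if match is None:
        raise ValueError(f"Unrecognized version: {version!r}")
    release = tuple(int(part) for part in match.group(1).split("."))
    release += (0,) * (3 - len(release))  # pad "1.0" to (1, 0, 0)
    post = int(match.group(2)) if match.group(2) else -1
    return release + (post,)


# Hypothetical, flattened view of the sklearn entry from the configuration file.
SKLEARN = {
    "pip_release": "scikit-learn",
    "install_dev": "pip install git+https://github.com/scikit-learn/scikit-learn.git",
    "unsupported": ["0.22.1"],
    "minimum": "0.20.3",
    "maximum": "1.0.2",
    "run": "pytest tests/sklearn/autologging",
}

# Sample of released versions; a real workflow would fetch this list from PyPI.
RELEASED = ["0.19.2", "0.20.3", "0.20.4", "0.22.1", "0.22.2.post1", "1.0.2"]


def build_matrix(package, config, released_versions):
    """Create one job per released version in [minimum, maximum], plus a 'dev' job."""
    low, high = parse_version(config["minimum"]), parse_version(config["maximum"])
    jobs = []
    for version in sorted(released_versions, key=parse_version):
        if version in config.get("unsupported", []):
            continue  # explicitly excluded by the config
        if low <= parse_version(version) <= high:
            jobs.append({
                "package": package,
                "version": version,
                "install": f"pip install {config['pip_release']}=={version}",
                "run": config["run"],
            })
    # The prerelease job installs from the main development branch via install_dev.
    jobs.append({
        "package": package,
        "version": "dev",
        "install": config["install_dev"],
        "run": config["run"],
    })
    return {"include": jobs}


print(json.dumps(build_matrix("sklearn", SKLEARN, RELEASED), indent=2))
```

Emitting one `include` entry per job keeps each job's install command explicit, so the dev job can use a completely different installation method from the released versions.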

Which versions to test

To limit the number of GitHub Actions runs, we only test the latest micro version of each minor version. For instance, if "1.0.0", "1.0.1", and "1.0.2" are available, we only test "1.0.2". The reasoning behind this approach is that most people don't explicitly install an old micro version of a minor release, and the latest micro version of a minor release is usually the most bug-free. The table below shows which versions we test for scikit-learn.

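| scikit-learn version | Tested |
|---|---|
| 0.20.3 | ✅ |
| 0.20.4 | ✅ |
| 0.21.0 | |
| 0.21.1 | |
| 0.21.2 | |
| 0.21.3 | ✅ |
| 0.22 | |
| 0.22.1 | |
| 0.22.2 | |
| 0.22.2.post1 | ✅ |
| 0.23.0 | |
| 0.23.1 | |
| 0.23.2 | ✅ |
| 0.24.0 | |
| 0.24.1 | |
| 0.24.2 | ✅ |
| 1.0 | |
| 1.0.1 | |
| 1.0.2 | ✅ |
| dev | ✅ |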
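The selection rule can be sketched as a function that groups versions by `(major, minor)` and keeps the highest release in each group. This is an illustration, not MLflow's code. One assumption is inferred from the table rather than stated in the text: the configured minimum (0.20.3) is tested even though 0.20.4 is the latest 0.20.x release, so the sketch also keeps the minimum when it appears in the list. `parse_version` is a hypothetical, simplified parser for `X.Y[.Z][.postN]` strings, and `select_versions` is a name chosen for this example.

```python
import re
from itertools import groupby


def parse_version(version):
    """Simplified parser for 'X.Y[.Z][.postN]' strings (hypothetical helper, not PEP 440)."""
    match = re.fullmatch(r"(\d+(?:\.\d+)*)(?:\.post(\d+))?", version)
    if match is None:
        raise ValueError(f"Unrecognized version: {version!r}")
    release = tuple(int(part) for part in match.group(1).split("."))
    release += (0,) * (3 - len(release))  # pad "0.22" to (0, 22, 0)
    post = int(match.group(2)) if match.group(2) else -1  # "0.22.2.post1" > "0.22.2"
    return release + (post,)


def select_versions(versions, minimum):
    """Keep the latest micro release of each minor version, plus the minimum if present."""
    floor = parse_version(minimum)
    ordered = sorted((v for v in versions if parse_version(v) >= floor), key=parse_version)
    selected = {minimum} if minimum in versions else set()
    # groupby needs sorted input: versions sharing (major, minor) are now adjacent.
    for _, group in groupby(ordered, key=lambda v: parse_version(v)[:2]):
        selected.add(list(group)[-1])  # highest release within this minor version
    return sorted(selected, key=parse_version)


SCIKIT_LEARN_VERSIONS = [
    "0.20.3", "0.20.4", "0.21.0", "0.21.1", "0.21.2", "0.21.3",
    "0.22", "0.22.1", "0.22.2", "0.22.2.post1", "0.23.0", "0.23.1", "0.23.2",
    "0.24.0", "0.24.1", "0.24.2", "1.0", "1.0.1", "1.0.2",
]

print(select_versions(SCIKIT_LEARN_VERSIONS, "0.20.3"))
```

Run on the versions from the table, the function selects the same released versions that are marked as tested; the "dev" job is added separately because it is not a published release.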

When to trigger cross-version testing

There are two events that trigger cross-version testing:

- When a relevant pull request is filed. For instance, if we file a PR that updates files under the mlflow/sklearn directory, the cross-version testing workflow triggers the scikit-learn jobs to make sure that the code changes in the PR are compatible with all supported scikit-learn versions.

- A daily cron job that runs all cross-version testing jobs, including the ones for prerelease versions. We check the status of this cron job every working day to catch issues as early as possible.
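For the pull-request trigger, the workflow has to decide which integrations a change touches. A minimal sketch, assuming a hypothetical mapping from integration names to path prefixes: only `mlflow/sklearn` comes from the text, the other prefixes are illustrative, and `integrations_to_test` is a name chosen for this example. A pull request selects only the matching integrations, while the scheduled daily run selects all of them.

```python
# Hypothetical mapping from integration name to path prefixes that affect it.
INTEGRATION_PATHS = {
    "sklearn": ["mlflow/sklearn", "tests/sklearn"],
    "xgboost": ["mlflow/xgboost", "tests/xgboost"],
    "lightgbm": ["mlflow/lightgbm", "tests/lightgbm"],
}


def integrations_to_test(changed_files, scheduled=False):
    """Return the sorted names of integrations whose cross-version jobs should run."""
    if scheduled:
        return sorted(INTEGRATION_PATHS)  # the daily cron job runs every integration
    affected = set()
    for path in changed_files:
        for name, prefixes in INTEGRATION_PATHS.items():
            if any(path.startswith(prefix) for prefix in prefixes):
                affected.add(name)
    return sorted(affected)


print(integrations_to_test(["mlflow/sklearn/__init__.py"]))  # ['sklearn']
print(integrations_to_test([], scheduled=True))  # ['lightgbm', 'sklearn', 'xgboost']
```

Restricting pull-request runs to affected integrations keeps feedback fast, while the daily run still covers everything, including prerelease versions.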

Testing the future

In cross-version testing, we run daily tests against both publicly available versions and prerelease versions installed from the main development branch of every library that MLflow integrates with. This allows us to anticipate how upcoming library releases will affect MLflow before they are published.

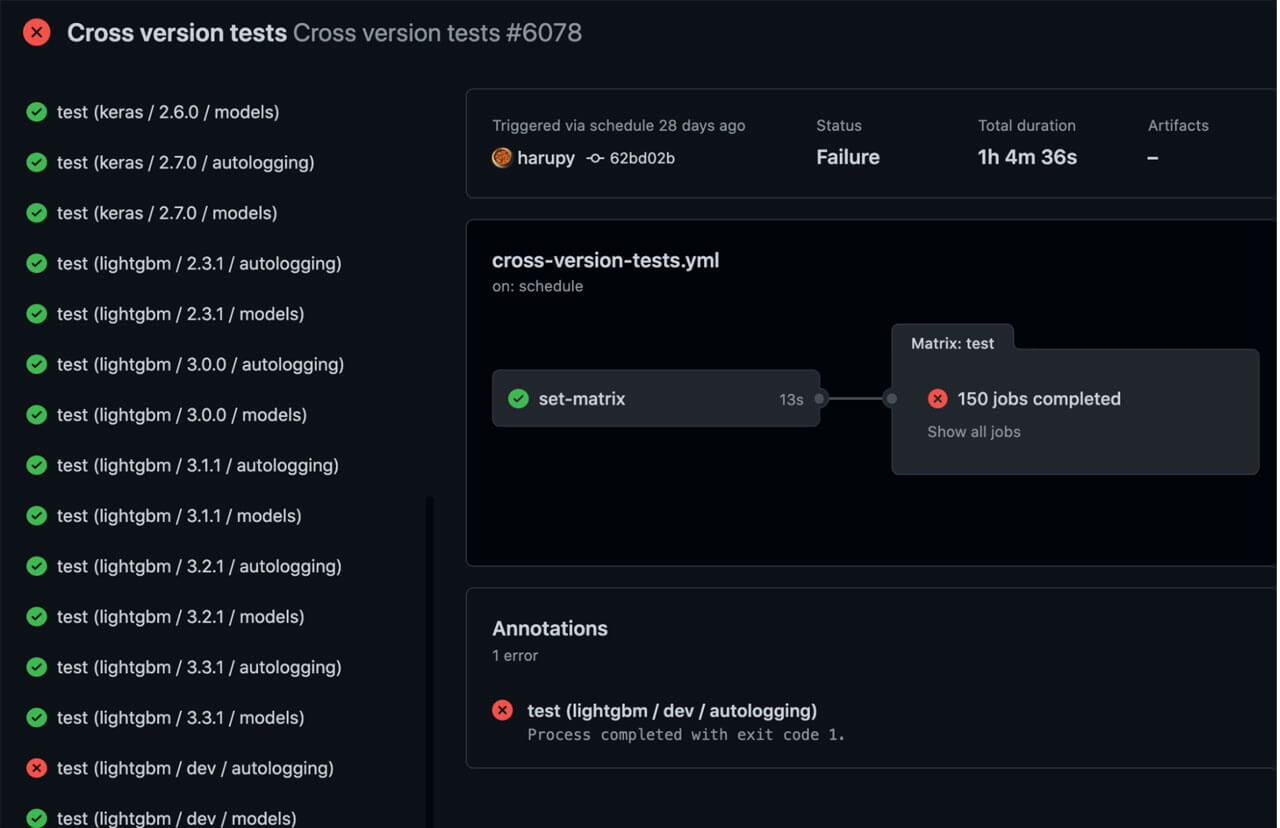

Let's take a look at a real scenario that the MLflow maintainers recently handled:

- On 2021/12/26, LightGBM removed several deprecated function arguments in microsoft/LightGBM#4908. This change broke MLflow's autologging integration for LightGBM.

- On 2021/12/27, we found that one of the cross-version test runs for LightGBM had failed and identified microsoft/LightGBM#4908 as the root cause.

- On 2021/12/28, we filed a PR to fix this issue: mlflow/mlflow#5206

- On 2021/12/31, we merged the PR.

- On 2022/01/08, LightGBM 3.3.2 was released, containing the breaking change.

```
|
├─ 2021/12/26 microsoft/LightGBM#4908 (breaking change) was merged.
├─ 2021/12/27 Found LightGBM test failure
├─ 2021/12/28 Filed mlflow/mlflow#5206
|
├─ 2021/12/31 Merged mlflow/mlflow#5206.
|
|
├─ 2022/01/08 LightGBM 3.3.2 release
|
|
├─ 2022/01/17 MLflow 1.23.0 release
|
v
time
```

Thanks to prerelease version testing, we were able to discover the breaking change the day after it was merged and quickly apply a patch for it, even before the LightGBM 3.3.2 release. This proactive work, handled ahead of time and on a less urgent schedule, allowed us to be prepared for the new LightGBM release and avoid breaking changes or regressions for MLflow users.

If we had not performed prerelease version testing, we would only have discovered the breaking change after the LightGBM 3.3.2 release, which could have resulted in a broken user experience depending on the LightGBM release date. For example, consider the problematic scenario below, where LightGBM is released after MLflow and no prerelease version testing is in place. Users running LightGBM 3.3.2 and MLflow 1.23.0 would have encountered bugs.

```
|
├─ 2021/12/26 microsoft/LightGBM#4908 (breaking change) was merged.
|
|
├─ 2022/01/17 MLflow 1.23.0 release
|
├─ 2022/01/20 (hypothetical) LightGBM 3.3.2 release
├─ 2022/01/21 Users running LightGBM 3.3.2 and MLflow 1.23.0
|             would have encountered bugs.
|
v
time
```

Conclusion

In this blog post, we covered:

- Why we implemented cross-version testing.

- How we configure and run cross-version testing.

- How we enhance the MLflow user experience and documentation using the cross-version testing results.

Check out this README file for further reading on the implementation of cross-version testing. We hope this blog post will help other open-source projects that provide integrations for many ML libraries.
