
Deep learning is probably the most popular form of machine learning today. Although not every problem boils down to a deep learning model, in domains such as computer vision and natural language processing deep learning is prevalent.

A key issue with deep learning models, however, is that they are resource hungry. They require lots of data and compute to train, and lots of compute to operate. As a rule, GPUs are known to perform better than CPUs for both training and inference, while some models cannot run on CPUs at all. Now Deci wants to change that.

Deci, a company aiming to optimize deep learning models, is releasing a new family of models for image classification. These models outperform well-known alternatives in both accuracy and runtime, and can run on the popular Intel Cascade Lake CPUs.

We caught up with Deci CEO and co-founder Yonatan Geifman to discuss Deci’s approach and today’s release.

Deep learning performance entails trade-offs

Deci was cofounded by Geifman, Jonathan Elial, and Ran El-Yaniv in 2019. All founders have a background in machine learning, with Geifman and El-Yaniv also having worked at Google. They had the chance to experience first hand how hard it is to get deep learning into production.

Deci’s founders realized that making deep learning models more scalable would help them run better in production environments. They also saw hardware companies trying to build better AI chips to run inference at scale.

The bet they took with Deci was to focus on the model design area in order to make deep learning more scalable and more efficient, so that models run better in production environments. They are using an automated approach to design models that are more efficient in their structure and in how they interact with the underlying hardware in production.

Deci’s proprietary Automated Neural Architecture Construction (AutoNAC) technology is used to develop so-called DeciNets. DeciNets are pre-trained models that Deci claims outperform known state-of-the-art models in terms of accuracy and runtime performance.

In order to get better accuracy in deep learning, you can take larger models and train them for a little bit more time with a little bit more data and you will get better results, Geifman said. Doing that, however, generates larger models, which are more resource intensive to run in production. What Deci is promising is solving that optimization problem, by providing a platform and tools to build models that are both accurate and fast in production.

Solving that optimization problem requires more than manual tweaking of existing neural architectures, and AutoNAC can design specialized models for specialized use cases, Geifman said. This means being aware of the data and the machine learning tasks at hand, while also being aware of the hardware the model will be deployed on.

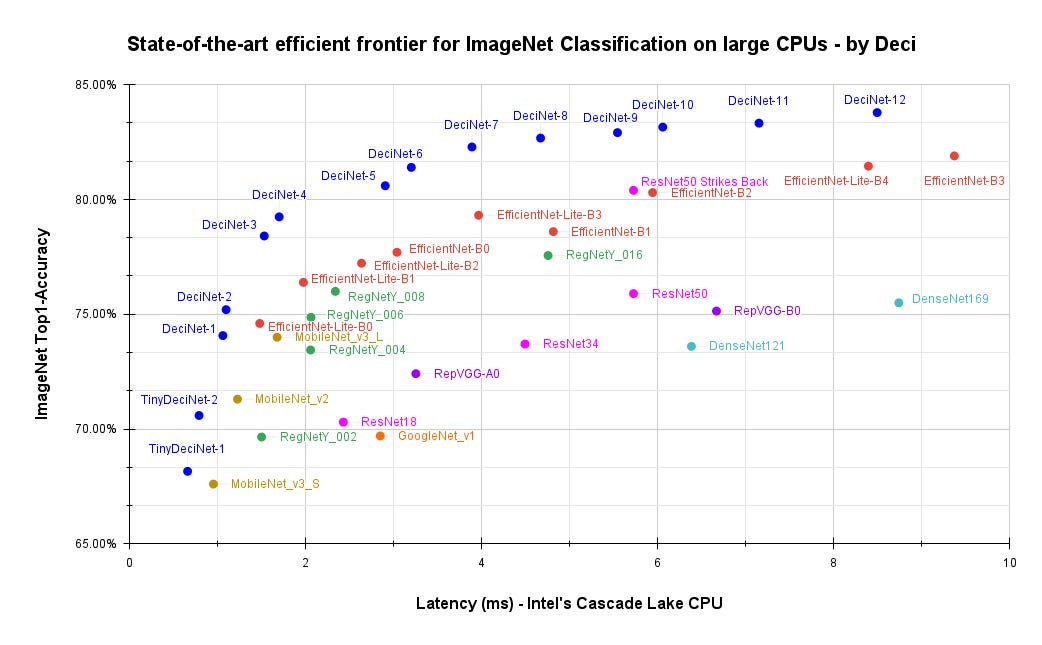

Performance of DeciNets for image classification compared to other deep learning image classification models for Intel Cascade Lake CPUs. Image: Deci

The DeciNets announced today are geared for image classification on Intel Cascade Lake CPUs, which as Geifman noted are a popular choice in many cloud instances. Deci dubs these models “industry-leading”, based on benchmarks which Geifman said will be released so that third parties can replicate them.

There are three main tasks in computer vision: image classification, object detection, and semantic segmentation. Geifman said Deci produces multiple types of DeciNets for each task. Each DeciNet aims at a different level of performance, defined as the trade-off between accuracy and latency.

In the results Deci released, variations of DeciNet with different levels of complexity (i.e. number of parameters) are compared against variations of other image classification models such as Google’s EfficientNet and the industry standard ResNet.
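To make the accuracy versus latency trade-off concrete, here is a minimal Python sketch of how one might shortlist models from benchmark results. It is not Deci’s method or tooling: the `Candidate` type, the helper functions, the model names, and every number are hypothetical and chosen only for illustration. The idea is to keep the models that no other model beats on both accuracy and latency (the Pareto front), then pick the most accurate one that fits a latency budget.

```python
from typing import NamedTuple, Optional


class Candidate(NamedTuple):
    name: str          # hypothetical model label
    accuracy: float    # top-1 accuracy, higher is better
    latency_ms: float  # inference latency in milliseconds, lower is better


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Return the candidates that no other candidate dominates.

    A candidate is dominated when another one is at least as accurate and
    at least as fast, and strictly better on one of the two metrics.
    """
    front = []
    for c in candidates:
        dominated = any(
            o.accuracy >= c.accuracy
            and o.latency_ms <= c.latency_ms
            and (o.accuracy > c.accuracy or o.latency_ms < c.latency_ms)
            for o in candidates
        )
        if not dominated:
            front.append(c)
    return sorted(front, key=lambda c: c.latency_ms)


def pick_for_budget(
    candidates: list[Candidate], max_latency_ms: float
) -> Optional[Candidate]:
    """Return the most accurate candidate within the latency budget, if any."""
    eligible = [c for c in candidates if c.latency_ms <= max_latency_ms]
    return max(eligible, key=lambda c: c.accuracy, default=None)


# Made-up benchmark numbers, for illustration only.
CANDIDATES = [
    Candidate("model-a", 0.76, 12.0),
    Candidate("model-b", 0.79, 20.0),
    Candidate("model-c", 0.78, 25.0),  # slower and less accurate than model-b
    Candidate("model-d", 0.82, 40.0),
]

if __name__ == "__main__":
    for c in pareto_front(CANDIDATES):
        print(c)
    print(pick_for_budget(CANDIDATES, max_latency_ms=30.0))
```

With these made-up numbers, `model-c` drops out because `model-b` is both faster and more accurate, and a 30 ms budget selects `model-b`.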

According to Geifman, Deci has dozens of models pre-optimized for customers to use in a fully self-service offering, covering tasks from computer vision to NLP and any type of production hardware.

The deep learning inference stack

However, there is a catch here. Since DeciNets are pre-trained, they will not necessarily perform as needed for a customer’s specific use case and data. Therefore, after choosing the DeciNet that has the optimal accuracy / latency trade-off for the use case’s requirements, users need to fine-tune it on their data.

Subsequently, an optimization phase follows, in which the trained model is compiled and quantized with Deci’s platform via API or GUI. Finally, the model can be deployed using Deci’s deployment tools Infery & RTiC. This end-to-end coverage is a differentiator for Deci, Geifman said. Notably, existing models can also be ported to DeciNets.
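For readers unfamiliar with quantization, the following Python sketch shows the general idea using a simple symmetric 8-bit scheme. It is a generic textbook-style illustration, not a description of how Deci’s platform quantizes models; the function names and sample weights are assumptions made for this example. Floating-point weights are mapped to small integers that share a single scale factor, trading a bounded rounding error for a smaller, cheaper representation.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one symmetric scale."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero input, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]  # made-up weights, for illustration only
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(q, scale, worst)
```

Each restored value differs from the original by at most half the scale, which is why quantization usually costs a little accuracy in exchange for lower memory use and faster integer arithmetic.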

When considering the end-to-end lifecycle, economics and trade-offs play an important role. The thinking behind Deci’s offering is that training models, while costly, is actually less costly than operating models in production. Therefore, it makes sense to focus on producing models that cost less to operate, while having accuracy and latency comparable to existing models.

The same pragmatic approach is taken when targeting deployment hardware. In some cases, when minimizing latency is the primary goal, the fastest possible hardware will be chosen. In other cases, getting less than optimal latency in exchange for reduced cost of operation may make sense.

For edge deployments, there may not even be a choice to be made: the hardware is what it is, and the model to be deployed should be able to operate under the given constraints. Deci is offering a recommendation and benchmarking tool that can be used to compare latency, throughput and cost for various cloud instances and hardware types, helping users make a choice.
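The kind of comparison described above comes down to simple arithmetic. The Python sketch below is a hypothetical illustration, not Deci’s benchmarking tool: the `Target` type, the instance names, the prices, and the measurements are all invented. It converts an hourly price and a measured throughput into a cost per million inferences, then picks the cheapest option that still meets a latency requirement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    name: str              # hypothetical instance label
    price_per_hour: float  # USD per hour, made-up
    throughput: float      # inferences per second, made-up
    latency_ms: float      # per-request latency in milliseconds, made-up

    def cost_per_million(self) -> float:
        """USD needed to serve one million inferences at full utilization."""
        inferences_per_hour = self.throughput * 3600
        return self.price_per_hour / inferences_per_hour * 1_000_000


def cheapest_within(targets, max_latency_ms):
    """Return the lowest-cost target that meets the latency limit, if any."""
    eligible = [t for t in targets if t.latency_ms <= max_latency_ms]
    return min(eligible, key=Target.cost_per_million, default=None)


# Invented numbers, for illustration only.
TARGETS = [
    Target("cpu-instance", price_per_hour=1.0, throughput=100.0, latency_ms=30.0),
    Target("gpu-instance", price_per_hour=3.0, throughput=200.0, latency_ms=8.0),
]

if __name__ == "__main__":
    for t in TARGETS:
        print(t.name, round(t.cost_per_million(), 2))
    print(cheapest_within(TARGETS, max_latency_ms=50.0))
    print(cheapest_within(TARGETS, max_latency_ms=10.0))
```

With these invented figures, the CPU instance is cheaper per inference and wins when 50 ms is acceptable, while a 10 ms requirement forces the more expensive GPU instance.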

Deci is involved in a partnership with Intel. Although today’s release was not done in collaboration with Intel, the benefits for both sides are clear. By working with Deci, Intel expands the range of deep learning models that can be deployed on its CPUs. By working with Intel, Deci expands its go-to-market reach.

Deci is targeting optimization of deep learning model inference on a variety of deployment targets. Image: Deci

As Geifman noted, however, Deci targets a wide range of hardware, including GPUs, FPGAs, and special-purpose ASIC accelerators, and has partnerships in place with the likes of HPE and AWS too. Deci is also working on partnerships with various types of hardware manufacturers, cloud providers and OEMs that sell data centers and services for machine learning.

Deci’s approach is reminiscent of TinyML, except that it targets a broader set of deployment targets. When discussing this topic, Geifman referred to the machine learning inference acceleration stack. According to this conceptualization, acceleration can happen at different layers of the stack.

It can happen at the hardware layer, by choosing where to deploy models. It can happen at the runtime / graph compiler layer, where we see solutions provided by hardware manufacturers such as TensorRT by Nvidia or OpenVINO by Intel. We also have the open source ONNX, supported by Microsoft, as well as commercial solutions such as Apache TVM being commercialized by OctoML.

On top of that, we have model compression techniques like pruning and quantization, which Geifman noted are widely used by various open source solutions, with some vendors working on commercialization. Geifman framed Deci as working on a level above those, namely the level of neural architecture search, helping data scientists design models to get better latency while maintaining accuracy.

Deci’s platform offers a Community tier aimed at developers looking to boost performance and shorten development time, as well as Professional and Enterprise tiers with more options, including use of DeciNets. The company has raised a total of $30 million in two funding rounds and has 40 employees, mostly based in Israel. According to Geifman, more DeciNets will be released in the immediate future, focusing on NLP applications.
