[ad_1]

Intro

As options architects, we work carefully with prospects each day to assist them get the very best efficiency out of their jobs on Databricks –and we regularly find yourself giving the identical recommendation. It’s not unusual to have a dialog with a buyer and get double, triple, or much more efficiency with just some tweaks. So what’s the key? How are we doing this? Listed here are the highest 5 issues we see that may make a big impact on the efficiency prospects get from Databricks.

Right here’s a TLDR:

- Use bigger clusters. It could sound apparent, however that is the primary downside we see. It’s truly not any costlier to make use of a big cluster for a workload than it’s to make use of a smaller one. It’s simply sooner. If there’s something it is best to take away from this text, it’s this. Learn part 1. Actually.

- Use Photon, Databricks’ new, super-fast execution engine. Learn part 2 to be taught extra. You received’t remorse it.

- Clear out your configurations. Configurations carried from one Apache Spark™ model to the following could cause large issues. Clear up! Learn part 3 to be taught extra.

- Use Delta Caching. There’s likelihood you’re not utilizing caching appropriately, if in any respect. See Part 4 to be taught extra.

- Concentrate on lazy analysis. If this doesn’t imply something to you and also you’re writing Spark code, leap to part 5.

- Bonus tip! Desk design is tremendous necessary. We’ll go into this in a future weblog, however for now, take a look at the information on Delta Lake finest practices.

1. Give your clusters horsepower!

That is the primary mistake prospects make. Many purchasers create tiny clusters of two employees with 4 cores every, and it takes endlessly to do something. The priority is at all times the identical: they don’t wish to spend an excessive amount of cash on bigger clusters. Right here’s the factor: it’s truly not any costlier to make use of a big cluster for a workload than it’s to make use of a smaller one. It’s simply sooner.

The hot button is that you simply’re renting the cluster for the size of the workload. So, when you spin up that two employee cluster and it takes an hour, you’re paying for these employees for the complete hour. Nonetheless, when you spin up a 4 employee cluster and it takes solely half an hour, the associated fee is definitely the identical! And that pattern continues so long as there’s sufficient work for the cluster to do.

Right here’s a hypothetical situation illustrating the purpose:

| Variety of Staff | Price Per Hour | Size of Workload (hours) | Price of Workload |

|---|---|---|---|

| 1 | $1 | 2 | $2 |

| 2 | $2 | 1 | $2 |

| 4 | $4 | 0.5 | $2 |

| 8 | $8 | 0.25 | $2 |

Discover that the overall price of the workload stays the identical whereas the real-world time it takes for the job to run drops considerably. So, bump up your Databricks cluster specs and velocity up your workloads with out spending any more cash. It will probably’t actually get any less complicated than that.

2. Use Photon

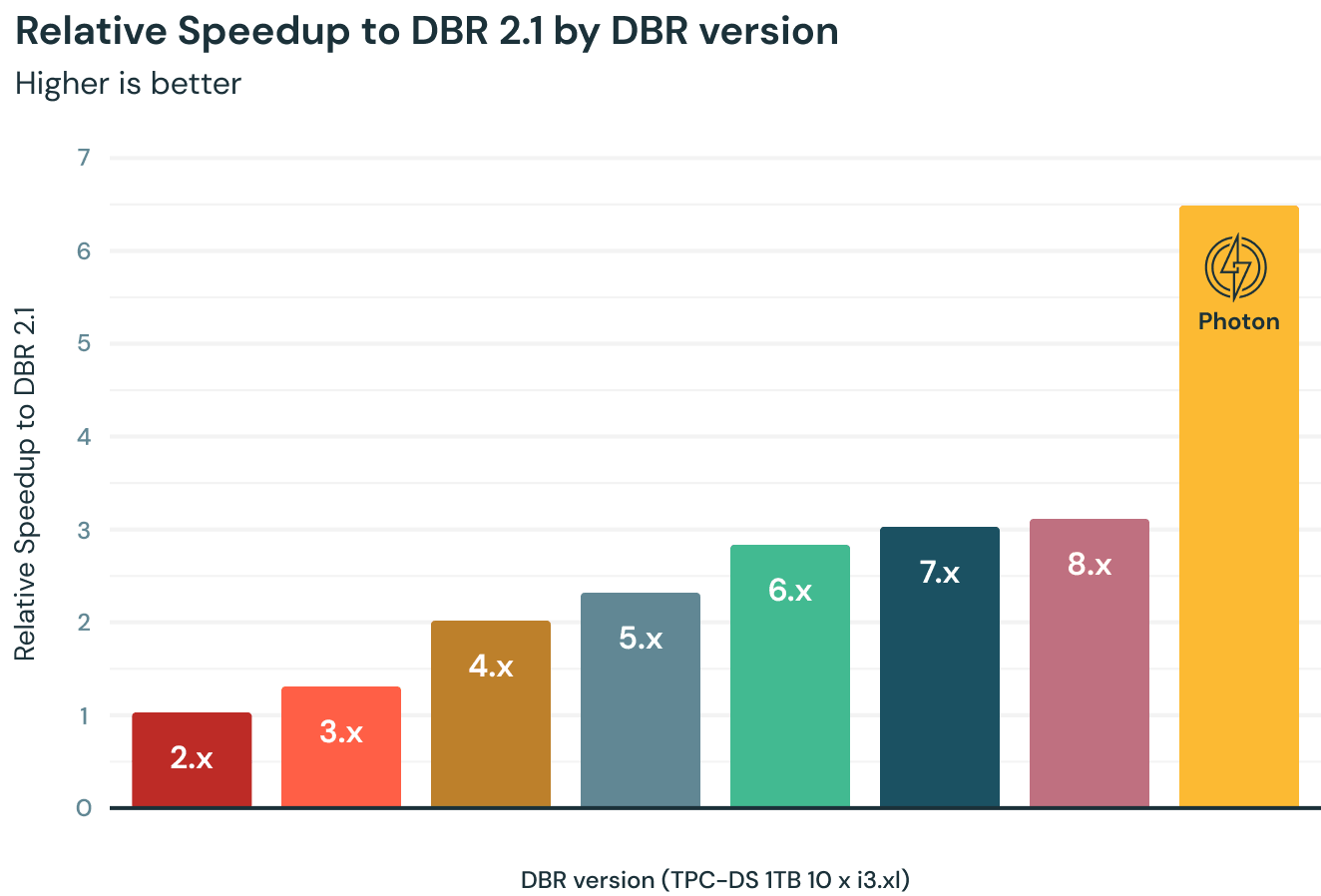

Our colleagues in engineering have rewritten the Spark execution engine in C++ and dubbed it Photon. The outcomes are spectacular!

Past the plain enhancements resulting from operating the engine in native code, they’ve additionally made use of CPU-level efficiency options and higher reminiscence administration. On prime of this, they’ve rewritten the Parquet author in C++. So this makes writing to Parquet and Delta (primarily based on Parquet) tremendous quick as effectively!

However let’s even be clear about what Photon is dashing up. It improves computation velocity for any built-in capabilities or operations, in addition to writes to Parquet or Delta. So joins? Yep! Aggregations? Certain! ETL? Completely! That UDF (user-defined perform) you wrote? Sorry, nevertheless it received’t assist there. The job that’s spending most of its time studying from an historic on-prem database? Gained’t assist there both, sadly.

The excellent news is that it helps the place it may well. So even when a part of your job can’t be sped up, it should velocity up the opposite components. Additionally, most jobs are written with the native operations and spend lots of time writing to Delta, and Photon helps quite a bit there. So give it a attempt. You might be amazed by the outcomes!

3. Clear out previous configurations

You already know these Spark configurations you’ve been carrying alongside from model to model and nobody is aware of what they do anymore? They is probably not innocent. We’ve seen jobs go from operating for hours right down to minutes just by cleansing out previous configurations. There might have been a quirk in a specific model of Spark, a efficiency tweak that has not aged effectively, or one thing pulled off some weblog someplace that by no means actually made sense. On the very least, it’s price revisiting your Spark configurations when you’re on this scenario. Typically the default configurations are the very best, they usually’re solely getting higher. Your configurations could also be holding you again.

4. The Delta Cache is your pal

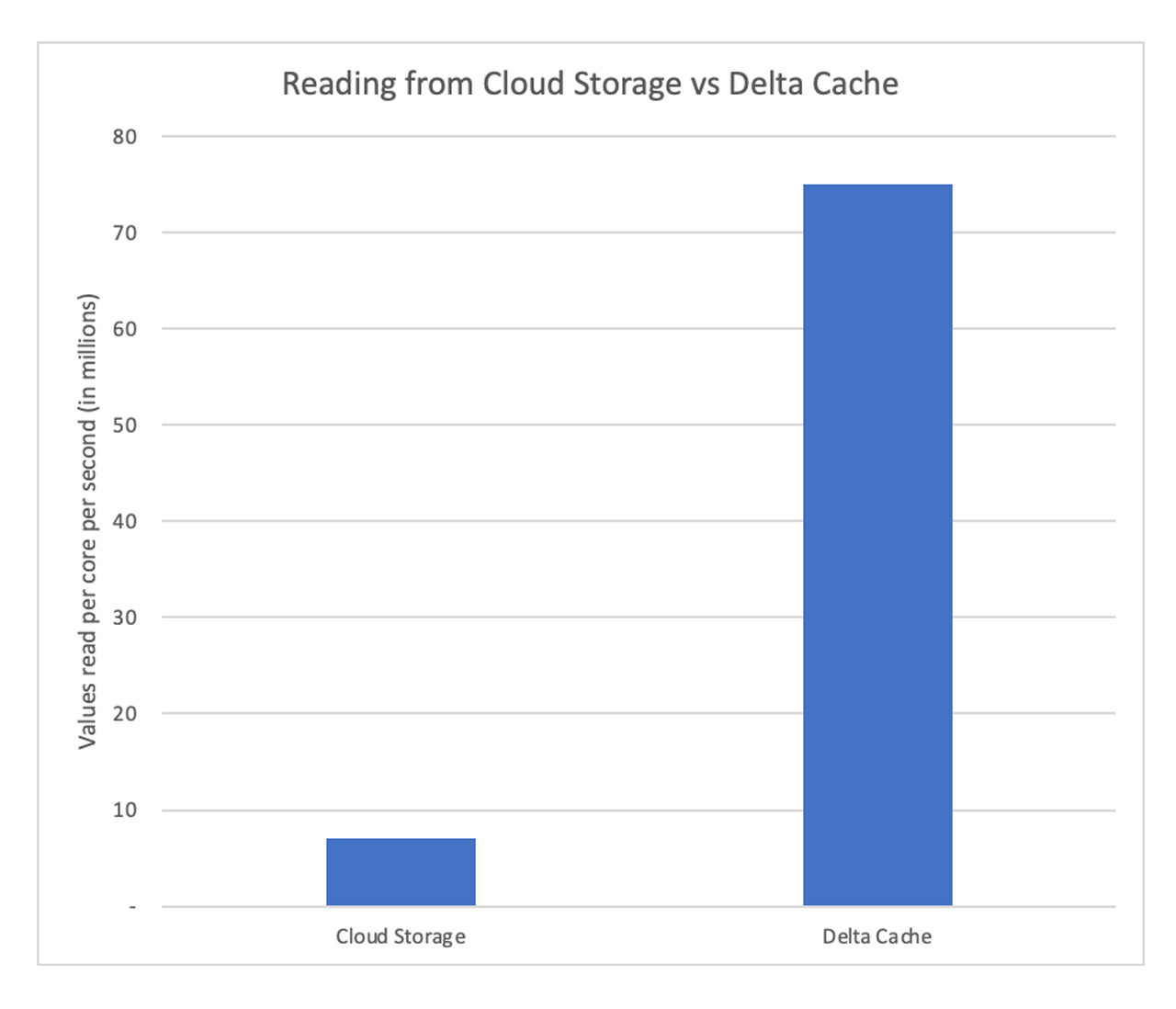

This will appear apparent, however you’d be stunned how many individuals are usually not utilizing the Delta Cache, which hundreds knowledge off of cloud storage (S3, ADLS) and retains it on the employees’ SSDs for sooner entry.

For those who’re utilizing Databricks SQL Endpoints you’re in luck. These have caching on by default. In truth, we suggest utilizing CACHE SELECT * FROM desk to preload your “sizzling” tables if you’re beginning an endpoint. This may guarantee blazing quick speeds for any queries on these tables.

For those who’re utilizing common clusters, make sure you use the i3 sequence on Amazon Net Companies (AWS), L sequence or E sequence on Azure Databricks, or n2 in GCP. These will all have quick SSDs and caching enabled by default.

In fact, your mileage might fluctuate. For those who’re doing BI, which includes studying the identical tables time and again, caching provides a tremendous enhance. Nonetheless, when you’re merely studying a desk as soon as and writing out the outcomes as in some ETL jobs, it’s possible you’ll not get a lot profit. You already know your jobs higher than anybody. Go forth and conquer.

5. Concentrate on lazy analysis

For those who’re a knowledge analyst or knowledge scientist solely utilizing SQL or doing BI you possibly can skip this part. Nonetheless, when you’re in knowledge engineering and writing pipelines or doing processing utilizing Databricks / Spark, learn on.

Once you’re writing Spark code like choose, groupBy, filter, and so on, you’re actually constructing an execution plan. You’ll discover the code returns virtually instantly if you run these capabilities. That’s as a result of it’s not truly doing any computation. So even when you’ve got petabytes of knowledge it should return in lower than a second.

Nonetheless, when you go to put in writing your outcomes out you’ll discover it takes longer. This is because of lazy analysis. It’s not till you attempt to show or write outcomes that your execution plan is definitely run.

—-------- # Construct an execution plan. # This returns in lower than a second however does no work df2 = (df .be part of(...) .choose(...) .filter(...) ) # Now run the execution plan to get outcomes df2.show() —------Nonetheless, there’s a catch right here. Each time you attempt to show or write out outcomes it runs the execution plan once more. Let’s have a look at the identical block of code however lengthen it and do just a few extra operations.

—-------- # Construct an execution plan. # This returns in lower than a second however does no work df2 = (df .be part of(...) .choose(...) .filter(...) ) # Now run the execution plan to get outcomes df2.show() # Sadly this can run the plan once more, together with filtering, becoming a member of, and so on df2.show() # So will this… df2.rely() —------The developer of this code might very effectively be pondering that they’re simply printing out outcomes thrice, however what they’re actually doing is kicking off the identical processing thrice. Oops. That’s lots of additional work. This can be a quite common mistake we run into. So why is there lazy analysis, and what will we do about it?

In brief, processing with lazy analysis is means sooner than with out it. Databricks / Spark appears to be like on the full execution plan and finds alternatives for optimization that may cut back processing time by orders of magnitude. In order that’s nice, however how will we keep away from the additional computation? The reply is fairly simple: save computed outcomes you’ll reuse.

Let’s have a look at the identical block of code once more, however this time let’s keep away from the recomputation:

# Construct an execution plan. # This returns in lower than a second however does no work df2 = (df .be part of(...) .choose(...) .filter(...) ) # reserve it df2.write.save(path) # load it again in df3 = spark.learn.load(path) # now use it df3.show() # this isn't doing any additional computation anymore. No joins, filtering, and so on. It’s already performed and saved. df3.show() # neither is this df3.rely()This works particularly effectively when Delta Caching is turned on. In brief, you profit significantly from lazy analysis, nevertheless it’s one thing lots of prospects journey over. So concentrate on its existence and save outcomes you reuse with a view to keep away from pointless computation.

Subsequent weblog: Design your tables effectively!

That is an extremely necessary subject, nevertheless it wants its personal weblog. Keep tuned. Within the meantime, take a look at this information on Delta Lake finest practices.

[ad_2]